Wally got up in the morning, showered, brushed his teeth, and, later, shaved.

|

The system will then study this information and will then attempt to build hypotheses as to how the information can be represented by its internal KR language. A multitude of hypotheses might need put forward:

- "Wally is a person, not the family's pet schnauzer, because animals usually don't shave"

- "The word later probably doesn't mean that he shaved late in the day, since shaving is usually a morning activity"

- "Wally probably got up, showered, and brushed his teeth in succession, not simultaneously"

To represent these hypotheses the systems breaks them into parts: Each hypothesis contains a formal bit of knowledge that can be represented in the internal vocabulary, whereas the rest of the hypothesis is information of a probabilitstic nature. So it might, conceivably, be represented as follows:

|

(hypothesis (is wally person) 0.9)

(hypothesis (time shave morning) 0.6)

(hypothesis (sequential-performed-activities wally (got-up showered brushed-teeth)) 0.8)

|

...where the last number represents the probability that the hypothesis is true. The system would constantly check the internal KR it has for inconsistencies, using a logical reasoning engine to deduce possible new facts from existing bits of knowledge. As new information is received, the system would constantly adjust the probabilities of previous hypotheses (and possibly discard them all together) based on new information. Also, the system constantly needs to maintain some type of context- For instance, if it turns out that the information being analyzed is part of a children's story, then it might want to give less weight to the hypothesis that Wally is definitely a Person and not an Animal. After a text has been completely analyzed, the system would then have, in its internal kr format, a representation of the information in a KR format that it could output or use for other purposes.

...So Why is A.I. so difficult?

Besides the fact that a decent symbolic reasoning system is just ridiculously complex in terms of its architecture to begin with, it has another great problem to deal with: Humans, when writing text or generating other information can draw on a vast amount of "common sense" knowledge to facilitate communication with any potential reader- Like "non-human animals usually don't shave themselves". Any A.I. system needs to duplicate this "common sense" to some degree if it wishes to succeed in comprehend most written text. Building such a database is mostly an "all or nothing" proposition- Although such "common sense" can help you deal with unexpected information, there are just so many different ways that unexpected information can be unexpected- And if you can only handle 40% of the cases, then there will always be some software created by The Guy in the Garage that will outperform your system in a manner that is totally unintelligent- Or maybe people will just read what The Writer has written directly, without using your software ahead of time.

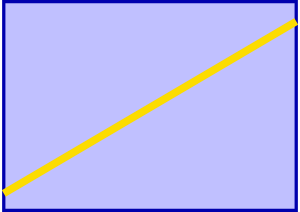

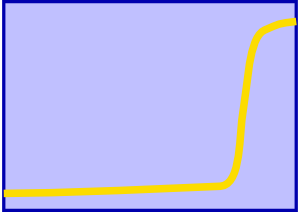

progress over time in most sciences

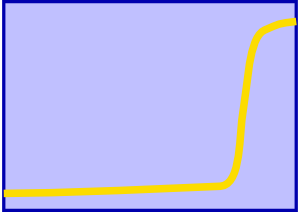

progress in A.I.

Are any more KR ideas in A.I. like Frame Systems and Description Logics That Haven't yet Made The Big Time?

Although most systems in A.I. tend to have frame systems or description logics at heart, some people have been experimenting with more exotic ways of representing knowledge that have not yet been used much outside of the field of A.I.. Most interesting of these try to address the way humans use metaphors and analogies to express ideas- I have not yet seen any example of how such concepts could be practically represented in a description logics. See the book Fluid Concepts and Creative Analogies. I would love to see a system that could do this!

|

RDF and the Semantic Web >>

|

|