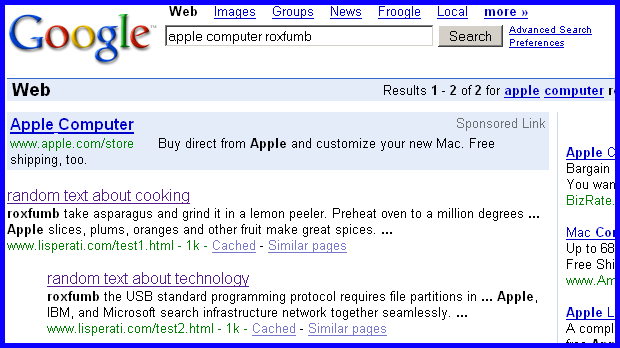

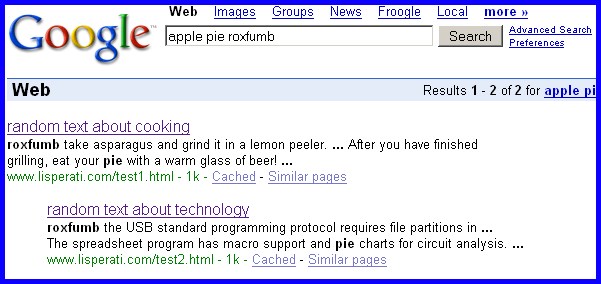

Once Google came into use, they obliterated these lofty researchers with a brilliant Guy-In-The-Garage search philosophy: "We'll just show you pages that have exactly the words you asked for. No fancy guessing. Plus, we'll sort the results based on site popularity." So far, Google has managed to maintain a perfect balance between pragmatism and science in their technology: by treating web pages mostly as just strings of words, they were able to remain pragmatic and outperform other search engines by being more comprehensive and more consistent than their competitors. But now they have the leisure to concentrate on more advanced knowledge systems. That's why it is fairly likely that they (and other survivors of the search wars, like Yahoo and MSN) are returning to more exotic KR systems.

At the most basic level, the main problems of a standard search engine revolve around synonyms and homonyms. For instance, if I perform an internet search for the term "Apple", there is no way for the computer system to know whether I intend to find information about the fruit or about the company Apple Computers, as these are homonyms: two different meanings for the same word. Even if I search in a way that avoids this ambiguity (by searching for "Apple Computers" using quote marks, for instance), it is likely that many of the web pages that refer to the computer company will use the vaguer word 'Apple' and will not be captured by my query. Synonyms (two different words that mean the same thing) pose similar problems.

As I already indicated, Google has always been a simple brute-force text searching engine at its heart (augmented with some clever ranking technology) that can be easily foiled by synonyms and homonyms... but do we know this for sure? Since the people at Google are reportedly pretty smart guys/gals, can we find any evidence yet that there is already some more advanced technology under the hood of the Google search engine?
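To make the homonym problem concrete, here is a minimal Python sketch of the "exact words plus popularity" philosophy described above. It is only an illustration and not how Google actually works: the three pages, their `.example` names, and their popularity scores are all invented for this example, and `search` is a hypothetical helper.

```python
import re

# Hypothetical pages and popularity scores, invented purely for illustration.
PAGES = {
    "orchard.example": ("Pick a ripe apple from the tree and bake it into a pie.", 10),
    "mac-news.example": ("Apple announced a new laptop for its customers today.", 50),
    "retro.example": ("Apple Computers sold its first machines in the 1970s.", 30),
}

def tokenize(text):
    """Lowercase the text and split it into plain words."""
    return re.findall(r"[a-z0-9]+", text.lower())

def search(query, phrase=False):
    """Return names of pages containing every query word, most popular first.

    With phrase=True the words must also appear consecutively,
    like a query wrapped in quote marks.
    """
    words = tokenize(query)
    hits = []
    for name, (text, popularity) in PAGES.items():
        tokens = tokenize(text)
        if phrase:
            n = len(words)
            matched = any(tokens[i:i + n] == words
                          for i in range(len(tokens) - n + 1))
        else:
            matched = all(w in tokens for w in words)
        if matched:
            hits.append((popularity, name))
    # Sort by popularity only; the engine has no idea what a page is about.
    return [name for popularity, name in sorted(hits, reverse=True)]

print(search("apple"))
print(search("Apple Computers", phrase=True))
```

The plain query returns the fruit page mixed in with the two company pages, ordered only by popularity. The quoted query drops the fruit page but also loses the company page that just says "Apple", which is exactly the trade-off described above.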
I decided to run a simple test to see if I could "peek under the hood" of Google with the hope of finding some evidence of more intelligent textual analysis: Is the Google engine, perchance, able to determine the context of a page in an automated fashion to disambiguate homonyms, for example? To test this idea, I placed two small hyperlinks at the bottom of my website www.lisperati.com that pointed to two carefully designed pages, containing two small fragments of text:

Page #1:

roxfumb take asparagus and grind it in a lemon peeler. Preheat oven to a million degrees, basting all turkeys as you dice an egg. Apple slices, plums, oranges and other fruit make great spices. Spread butter on a can of soda and slice it into generous figs. After you have finished grilling, eat your pie with a warm glass of beer! You can find great recipies on your computer.

Page #2:

roxfumb the USB standard programming protocol requires file partitions in the operating system. Log in to the screen saver. Apple, IBM, and Microsoft search infrastructure network together seamlessly. The word processor has Font kerning issues. The spreadsheet program has macro support and pie charts for circuit analysis. Use a Pentium processor to power your computer.

As you can see, these text fragments are basically gibberish without any discernible meaning. However, any human can immediately discern that the first fragment primarily consists of instructions for cooking food, whereas the second fragment is a discussion of computer technology. Also, both pages contain a nonsense word, roxfumb, which is unique throughout the entire internet (at least prior to publication of this website :) as well as three identical vocabulary words: "apple", "computer", and "pie", in roughly identical positions in the text.

So here is my question: Once Google has incorporated these pages in its index, how would it behave on the following two queries:

Query #1:
